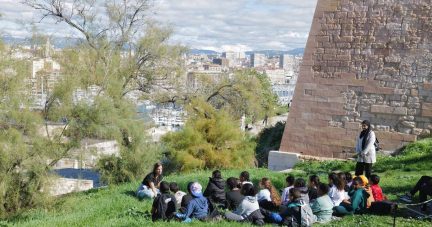

Dilapidated buildings, streets littered with trash, and cheerless residents sporting dirty clothes with holes in them. That’s how the AI-powered image creator Midjourney portrayed France’s suburbs, the banlieues, in 2023, peddling negative stereotypes about the suburban neighbourhoods surrounding French cities.

The disparaging portrayal of French suburbs was the subject of a viral campaign by ride-hailing app Heetch, which invited residents to send postcards to Midjourney’s developers urging them to remove banlieue bias from their AI model.

As the campaign showed, prompts such as “banlieue school” or “banlieue wedding” produced startlingly prejudiced images that contrasted sharply with those generated for non-suburban France.

Discriminatory bias is equally present in AI chatbots, particularly regarding people of colour, women and those with disabilities, says journalist Rémy Demichelis, who has written a book on bias in artificial intelligence systems (“L’Intelligence artificielle, ses biais et les nôtres”).

Such automated discrimination has its roots in the data that AI systems are trained on, which is itself imbued with stereotypes and therefore biased.

Research into wedding photo databases, for instance, has shown that almost half the pictures come from just two countries: the US and the UK.

“AI feeds off databases that draw largely from Western content and have a specific cultural context,” Demichelis explains. That’s why AI systems might label outfits from a Pakistani wedding as “folk attire”: most of the photos in their databases feature brides in white gowns.

Growing awareness

There is no such thing as a neutral algorithm, stresses Demichelis, noting that “algorithms not only reproduce bias inherent in society but also reinforce it”.

Recognition of this structural bias does not exempt the industry and decision-makers from striving to correct it, argues Jean Cattan, head of France’s Conseil national du numérique, an independent advisory body tasked with exploring the complex relationship between digital technology and society.

“We’re seeing growing awareness of the problem and burying our heads in the sand is not an option,” he says.

Cattan spoke of “concrete improvements” in the better-known chatbots, while cautioning that the recent proliferation of AI systems means not all are moderated or subject to the same standards.

“There is now an abundance of conversational assistants that carry the same biases as in the past, or even more serious biases, with relatively little scrutiny of the databases they are trained on,” he warned.

‘Masculine energy’

Databases are not the only reason generative artificial intelligence can produce skewed results, with experts highlighting the role played by engineers who design AI algorithms and models.

“Obviously, if you have only white people, and men in particular, designing these tools, they’ll be less likely to take discriminatory biases into account,” says Demichelis.

The Algorithmic Justice League, a US-based advocacy group, has long warned about unchecked and unregulated AI systems that amplify racism, sexism and other forms of discrimination. It advocates the use of “algorithmic audits” to ensure accountability and mitigate bias.

While such audits are increasingly popular, they remain “poorly defined” and difficult to verify, the group warns. Its recommendations include “directly involving the stakeholders most likely to be harmed by AI systems in the algorithmic audit process”.

US President Donald Trump’s return to power, however, coupled with the tech giants’ pandering to his rhetoric, does not bode well for efforts to tackle discrimination and the lack of accountability in AI.

“When you hear Mark Zuckerberg lament the lack of masculine energy in the tech world, you wonder whether we can expect any positive developments in the industry,” says Demichelis. “All the battles waged in recent years are being called into question, and there’s a risk that discrimination will actually increase.”

Google’s AI debacle

There have been attempts in the industry to correct inherent prejudice – some ending in embarrassment.

Google’s AI tool Gemini was lambasted last year when an ill-designed attempt to correct bias resulted in the chatbot generating images of Asian women in Nazi uniform and a Black US Founding Father.

Viral posts of Gemini’s historically inaccurate images quickly became ammunition in a wider culture war over political correctness, forcing Google to apologise and “pause” the tool.

Elon Musk, true to form, stepped into the fray, branding Gemini “woke” and “racist” even as he promoted his own AI assistant.

Such incidents highlight the difficulty of training AI models to offset centuries of human bias without creating other problems.

“Technically, it’s extremely difficult for designers to pinpoint exactly where the prejudice occurs,” says Demichelis, noting that some form of bias is inevitable.

Europe’s regulatory push

Cattan says improving chatbots will depend largely on user feedback and ensuring companies are required to report on the complaints and other feedback they receive.

The head of the Conseil national du numérique recommends using tools that compare different AI models, such as the Compar:ia platform developed by France’s culture ministry.

He hopes the European Union’s new AI Act, which will come into force in stages over the next three years, will protect users and mark a milestone in regulating the technology.

The Act, parts of which came into effect earlier this month, notably bans “biometric categorisation” systems that sift people based on biometric data to infer their race, sexual orientation, political opinions or religious beliefs.

The world’s first regulatory framework of its kind, the AI Act has been heavily criticised by US tech giants. Facebook’s parent company Meta has already said it will not release an advanced version of its Llama AI model in the EU, blaming the decision on the “unpredictable” behaviour of regulators.

“It remains unclear whether the EU can hold the line” in the face of the deregulatory wave pushed by Trump and Musk, adds Demichelis.

Analysts have warned that Europe is lagging behind the US and China in the global AI race. Its ability to stand up to tech giants may yet shape the future of AI regulation.

This article has been translated from the original in French.
